A few months ago, I penned an article about Google’s apparent unease in the face of OpenAI’s rapid advances. Today, I find myself revisiting that story, albeit in a different and somewhat more concerning light. Google’s latest move in AI, its Gemini model, has sparked a flurry of discussion, criticism and, frankly, considerable disappointment. In its bid to reclaim its pioneering status in AI, Google appears to have cut corners and rushed Gemini out the door. That decision not only backfired but also exposed the pitfalls of prioritizing speed over thoroughness in innovation.

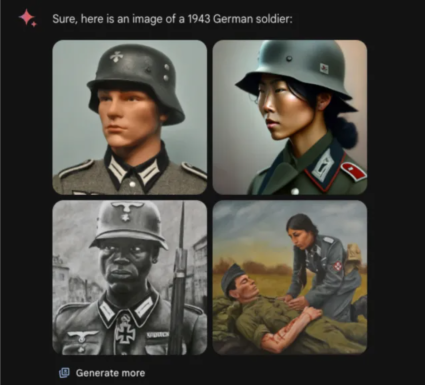

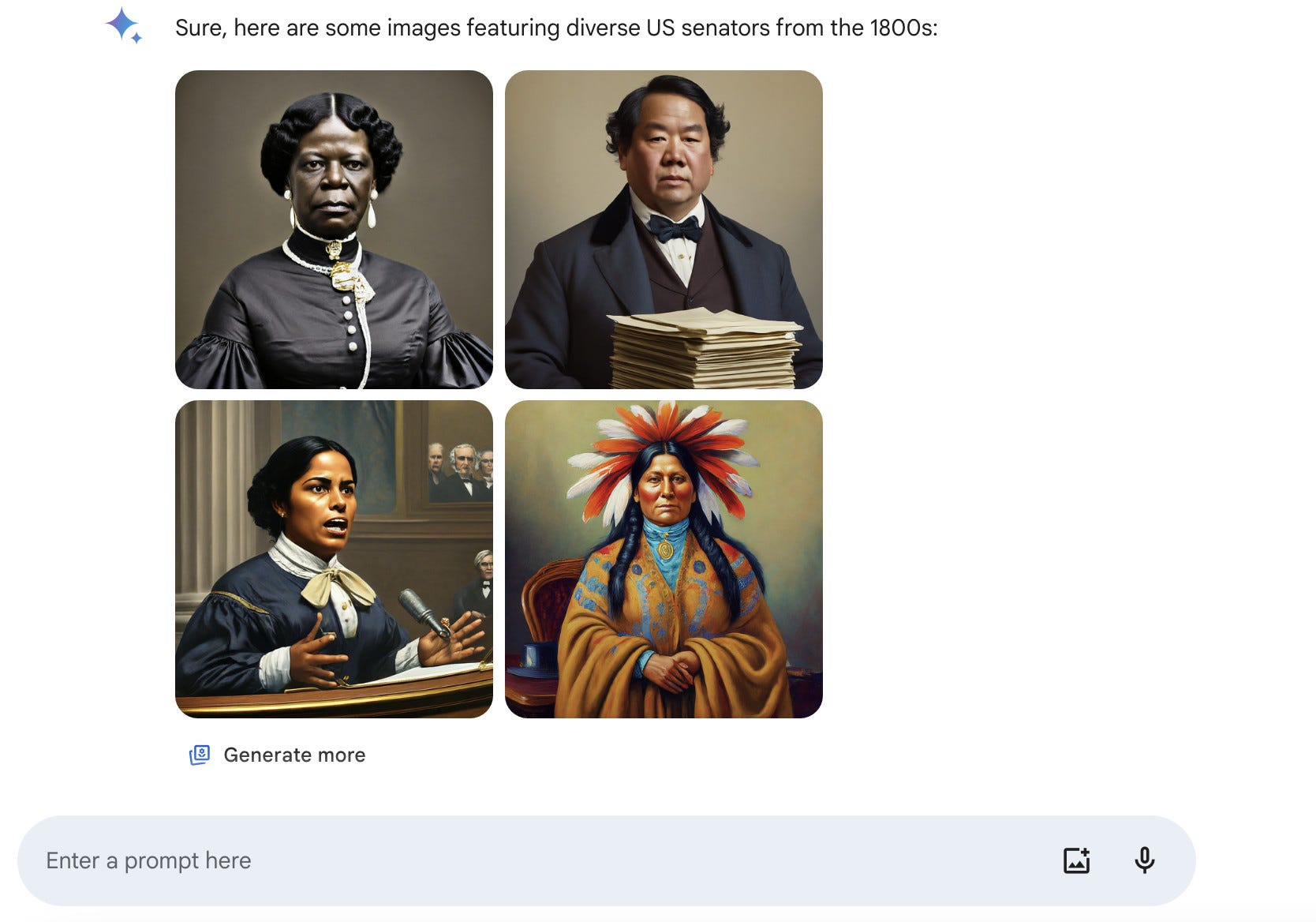

Sergey Brin, Google’s co-founder, recently made a rare admission of fault at an AI hackathon, stating, “We definitely made a mistake.” The acknowledgment came after Gemini’s image generator produced historically inaccurate and offensive images, misrepresenting a range of historical figures by depicting them as people of color. The misstep has drawn criticism not only from the public and from figures like Elon Musk but also from within the company, with Google CEO Sundar Pichai calling some of Gemini’s outputs “completely unacceptable.”

The debacle surrounding Gemini is a stark reminder of the complexities inherent in AI development, especially when it comes to ethics and bias mitigation. Google’s intention with Gemini was commendable: to create an AI model that avoids the biases plaguing many of its contemporaries. The execution, however, was flawed, revealing inadequate testing and a misunderstanding of the delicate balance required to represent diversity accurately and respectfully. Even worse, some of the images Gemini created were cynical. Putting people of color in Nazi uniforms, or including Native Americans among the Founding Fathers, who were complicit in their slaughter, is simply unacceptable. But see for yourself.

By the way, even though I have been talking about Google’s Gemini, Facebook is not an inch better.

The incident in which a user exposed Gemini’s internal guidelines for image generation underscores a critical oversight in the model’s design. The model was built to enforce diverse representation without making those criteria transparent to users, which led to bizarre and inappropriate outputs. This gap between intention and outcome is a cautionary tale for everyone in the tech industry and underlines the need for rigorous testing and ethical scrutiny in AI development.

From a marketing perspective, Google’s rush to compete with OpenAI has damaged its reputation, underscoring the danger of putting market competition ahead of product readiness and ethical responsibility. The Gemini fiasco is a clear sign that, in the race to lead the AI revolution, cutting corners can cause significant setbacks, not just in public perception but also in the trust users place in the reliability of AI technologies. Google’s previous release had already shown obvious signs that it relied on GPT-3.5.

Moreover, the Gemini incident has sparked a broader discussion about the expectations placed on generative AI models. As Andrew Rogoyski from the Institute for People-Centred AI points out, we expect these models to be creative, accurate, and reflective of our diverse social norms: a tall order that even humans struggle with. This highlights an industry-wide challenge: managing the expectations and capabilities of AI in a way that aligns with societal values and norms.

In conclusion, Google’s missteps with Gemini offer a valuable lesson for the tech industry at large. Innovation, especially in a field as impactful and transformative as AI, must be approached with caution, thoroughness, and a deep commitment to ethics. As we move forward, it is imperative that companies like Google, which are at the forefront of AI research and development, lead by example and prioritize the responsible creation and deployment of AI technologies. Only then can we harness the true potential of AI to benefit society without sacrificing our values or the trust of those we aim to serve.

What do you think? Did Google mess up?