State of AI Series:

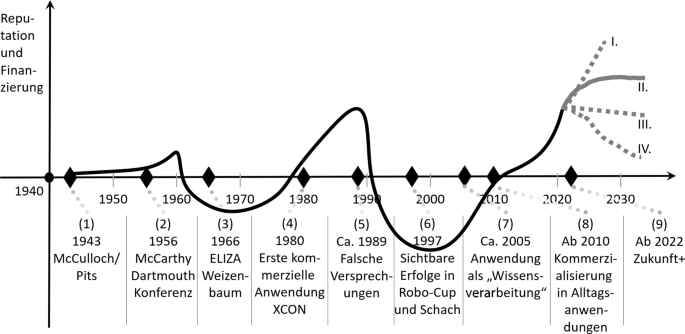

With all the recent hype, one might think AI is a rather new topic. The truth is different.

The early days:

Artificial Intelligence (AI) has been a topic of discussion for a very long time, and the journey of AI started long before the actual term was coined. The timeline of AI is an intriguing one, and it spans centuries, starting from 250 BC, when the first artificial automatic self-regulatory system was developed by the Greek inventor and mathematician, Ctesibius. This blog post will take a closer look at the timeline of AI and how it has evolved over the centuries. One thing always stayed true during the last 2,500 years – we humans always wanted to create something, someone, like ourselves.

AI in 250 BC

The journey of AI started in 250 BC when Ctesibius developed the world’s first artificial automatic self-regulatory system, called clepsydra or “water thief.” The system ensured that the container used in water clocks, which were built to mark the passing of time, remained full.

AI in 380 BC – Late 1600s

During this period, many theologians, mathematicians, and philosophers published works that explored mechanical techniques and numeral systems. This gave root to the notion of mechanized “human” thinking in non-human objects. For instance, the Catalan poet and theologian Ramon Llull published Ars generalis ultima (The Ultimate General Art), refining his method of using paper-based mechanical means to create new knowledge from combinations of concepts.

Around 1495, Leonardo da Vinci designed and potentially built a humanoid automaton called the “Automa cavaliere” or “Automaton knight”, which is also known as Leonardo’s robot or Leonardo’s mechanical knight.

AI in Early 1700s

Jonathan Swift’s novel “Gulliver’s Travels” describes a device called the engine. This was one of the earliest references to modern-day technology, in particular, a computer. The aim of the device was to enhance knowledge and mechanical operations until even the least talented human would appear skilled through the aid of a nonhuman mind that simulates intelligence.

A new boost in the industrial revolution:

In 1872, the author Samuel Butler published his novel “Erewhon,” which explored the idea that machines might, at some point in the future, come to possess consciousness.

AI from 1900-1950

As the 1900s began, the pace of advancements related to AI picked up considerably. The history of AI in the first half of the century is an interesting one.

1921: The Czech playwright Karel Čapek premiered a science fiction play named “Rossum’s Universal Robots.” The play introduced the idea of factory-made artificial people, whom he named robots. This is the first known use of the term as we know it today. After this, many people began adopting the robot concept and applying it in their art and research.

1927: The sci-fi film “Metropolis” (directed by Fritz Lang) was released. This film is well known for one of the first on-screen portrayals of a robot, which inspired other renowned non-human characters in later films.

1929: Gakutensoku, the first robot developed in Japan, was created by the Japanese biologist and professor Makoto Nishimura. The name literally means “learning from the laws of nature,” reflecting the idea that the robot’s artificially intelligent mind could gain knowledge from nature and people.

1939: Work began on the Atanasoff-Berry Computer (ABC), an early electronic digital computer, at Iowa State University by the inventor and physicist John Vincent Atanasoff with Clifford Berry, his graduate student assistant. The machine was not programmable in the modern sense. It weighed more than 700 pounds and was capable of solving up to 29 simultaneous linear equations.

The 1940s and 50s are where it starts getting really interesting.

1949: The mathematician and actuary Edmund Berkeley published the book “Giant Brains: Or Machines That Think.” The book highlighted how machines were becoming more and more adept at handling large amounts of information. It also compared the abilities of machines with the human mind, concluding that machines can actually think.

The 1950s marked the beginning of Artificial Intelligence (AI) as a field, as researchers began exploring the possibilities of creating intelligent machines. The decade saw significant breakthroughs. In 1950, Claude Shannon published “Programming a Computer for Playing Chess,” one of the first papers to describe how a chess-playing program could work. In the same year, Alan Turing published his paper “Computing Machinery and Intelligence,” which proposed what became known as the “Turing Test,” a way to examine whether a machine can exhibit behavior indistinguishable from a human’s. Other notable advancements in the 1950s included the first checkers-playing program by Arthur Samuel in 1952 and the Logic Theorist, often described as the first AI computer program, developed by Allen Newell, Herbert Simon, and Cliff Shaw in 1955. Also in 1955, John McCarthy, an American computer scientist, and his colleagues proposed a workshop on “artificial intelligence,” coining the term; the workshop took place at Dartmouth College in 1956.

To sum up the 50s:

- First AI research findings based on chess and checkers

- Alan Turing’s paper on “Computing Machinery and Intelligence” proposed the Turing Test

- John McCarthy coined the term “artificial intelligence” at a Dartmouth College Conference

- First AI computer program, Logic Theorist, was developed

- Lisp, a high-level programming language for AI research, was created

The 1960s were characterized by the development of automatons and robots, new programming languages, research, and films depicting artificially intelligent beings. In 1961, the Unimate, an industrial robot invented by George Devol in the 1950s, began working on a General Motors assembly line in New Jersey. The same year, James Slagle developed the Symbolic Automatic Integrator (SAINT), a program that solved symbolic integration problems at the level of freshman calculus. In 1964, Daniel G. Bobrow developed STUDENT, an early AI program designed to read and solve algebra word problems. In 1966, MIT professor Joseph Weizenbaum developed the first chatbot, Eliza, a natural language processing program with which some users began to form emotional connections. The same year, work began on the first mobile robot, “Shakey,” a project that linked different AI fields with navigation and computer vision. The robot was completed in 1972 and currently resides in the Computer History Museum. In 1968, the popular sci-fi film 2001: A Space Odyssey was released, featuring HAL, an AI-powered computer. Around the same time, Terry Winograd began developing SHRDLU, an early natural language computer program.

Summing up the 60s:

- Development of automatons, robots, and new programming languages

- The first industrial robot, Unimate, performed on General Motors assembly line

- Chatbot Eliza was developed by MIT professor Joseph Weizenbaum

- The first mobile robot, “Shakey,” was created as a project to link different AI fields

- Sci-fi film “2001: A Space Odyssey” featuring the AI-powered computer HAL was released

The 1970s saw the development of the first anthropomorphic robot, WABOT-1, at Waseda University in Japan, where the project began in 1970. The decade also witnessed challenges in the AI field, including a decrease in government support for research. In 1973, James Lighthill, an applied mathematician, reported that none of the discoveries in AI had generated the expected impact, which led the British government to considerably cut its support for AI research. In 1977, the Star Wars saga kicked off, featuring C-3PO, a humanoid robot, and R2-D2, an astromech droid that communicates through electronic beeps. In 1979, the Stanford Cart, a remotely controlled TV-equipped mobile robot, crossed a room filled with chairs without human interference, making it one of the earliest examples of an autonomous vehicle.

Other notable advancements from the 50s to the 70s include the General Problem Solver, an AI program designed to solve a wide range of formalized problems, developed by Allen Newell and Herbert Simon in 1957. A few years earlier, in 1954, the Georgetown-IBM experiment had demonstrated one of the first machine translation systems. In the early 1960s, IBM presented the Shoebox, an early speech recognition system, and in 1969, ARPANET, the first wide-area packet-switched computer network, was established, paving the way for the Internet. Also in 1969, Victor Scheinman developed the Stanford Arm, an early computer-controlled robot arm, at Stanford University.

And a glimpse at the 70s:

- Advancements in relation to automatons and robots

- Government scaled down support for AI research

- First anthropomorphic robot, WABOT-1, was developed in Japan

- The Lighthill report (1973) concluded that AI discoveries had not generated the expected impact

- Film “Star Wars” featured humanoid robot C-3PO and astromech droid R2-D2

The 1980s marked a pivotal point in the history of artificial intelligence (AI) development. While the decade witnessed some significant advancements in the field, it was also marked by a period of low interest and funding, known as the “AI Winter.” However, the decade did bring about some notable developments that contributed to shaping the future of AI.

In 1980, Waseda University in Japan began work on WABOT-2, a humanoid robot that was capable of interacting with people and playing music on an electronic organ. The robot was a significant milestone in the field, as it demonstrated the potential of AI to let machines simulate human-like behavior and cognition.

The Japanese Ministry of International Trade and Industry made a significant investment in AI during the decade, allotting $850 million to the Fifth Generation Computer project in 1981. The project aimed to develop computers that could converse, translate languages, interpret pictures, and reason like human beings. The ambitious project was widely seen in other countries as a competitive challenge in the field of AI.

Despite these advancements, the AI Winter began to loom over the industry, characterized by a period of low interest and funding. The Association for the Advancement of Artificial Intelligence (AAAI) warned of the impending AI Winter in 1984, a prediction that proved accurate within the next three years.

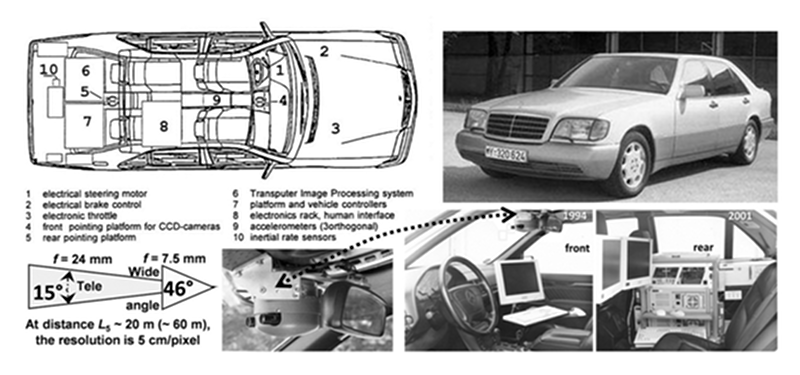

However, the decade was also marked by some notable achievements in the field. In 1986, the first driverless car, a Mercedes-Benz van, was developed under the guidance of Ernst Dickmanns. The car was equipped with sensors and cameras, allowing it to navigate streets without human intervention, a significant milestone in the field of AI.

Summing up AI in the 1980s:

- The AI Winter, a period of low interest and funding in the AI field, began to loom.

- WABOT-2, a humanoid robot capable of interacting with people and playing music, was developed at Waseda University.

- The Japanese Ministry of International Trade and Industry allotted $850 million to the Fifth Generation Computer project, which aimed to develop computers that could interact, translate languages, interpret pictures, and analyze like human beings.

- The Association for the Advancement of Artificial Intelligence (AAAI) warned of the impending AI Winter, which proved accurate within the next three years.

- The first driverless car, a Mercedes-Benz van, was developed under the guidance of Ernst Dickmanns.

The 1990s were a time of great advancement in the field of Artificial Intelligence. Rodney Brooks proposed a new approach to AI development in his 1990 paper “Elephants Don’t Play Chess”, suggesting that intelligent systems should be built from the ground up based on ongoing physical interaction with the environment. This approach emphasized the importance of physical interaction between the machine and the world around it, leading to the development of more advanced systems capable of learning and adapting to their surroundings.

Another notable development during this time was the creation of A.L.I.C.E (Artificial Linguistic Internet Computer Entity) by Richard Wallace. This conversational bot was inspired by Weizenbaum’s ELIZA, but differentiated itself by collecting natural language sample data. A.L.I.C.E was a significant step forward in natural language processing, opening up new possibilities for human-machine interaction and communication.

In 1997, Jürgen Schmidhuber and Sepp Hochreiter proposed the Long Short-Term Memory (LSTM), a type of recurrent neural network (RNN) architecture that has since been adopted for speech and handwriting recognition. This revolutionary development paved the way for the advancement of deep learning and neural network technologies, making it possible to create more advanced AI systems capable of understanding and interpreting complex patterns of information.

IBM’s chess-playing computer Deep Blue also made history in 1997, becoming the first system to win against a reigning world chess champion. This achievement was a significant milestone in the field of AI, demonstrating the potential of intelligent systems to compete with human experts in complex games and other applications.

In 1998, the robot “Kismet” was built by Cynthia Breazeal at MIT. It is a robot head that can detect and simulate emotions through its face, which is modeled on a human face and equipped with eyes, lips, eyelids, and eyebrows.

The 1990s also saw the invention of Furby, the first domestic or pet toy robot, by Dave Hampton and Caleb Chung. Furby was a significant innovation in the field of robotics, opening up new possibilities for interactive and engaging human-machine experiences. The success of Furby paved the way for the development of more advanced robotic systems, including personal assistants, home security systems, and entertainment devices.

The 1990s:

- Rodney Brooks proposed a new approach for AI in his paper “Elephants Don’t Play Chess”, suggesting that intelligent systems should be built from the ground up based on ongoing physical interaction with the environment.

- A.L.I.C.E (Artificial Linguistic Internet Computer Entity), built by Richard Wallace, was a conversational bot inspired by Weizenbaum’s ELIZA but differentiated by the addition of natural language sample data collection.

- Jürgen Schmidhuber and Sepp Hochreiter proposed Long Short-Term Memory (LSTM), a type of recurrent neural network (RNN) architecture now adopted for speech and handwriting recognition.

- IBM’s chess-playing computer Deep Blue became the first system to win against a reigning world chess champion.

- Furby, the first domestic or pet toy robot, was invented by Dave Hampton and Caleb Chung.

Since 2000

Artificial Intelligence (AI) has come a long way since its inception in 250 BC, with numerous advancements and innovations in the field. The 2000s saw significant strides in the development of AI technology, starting with the release of Honda’s ASIMO humanoid robot in 2000, capable of walking at human speed and delivering trays in restaurants. In 2002, iRobot launched the Roomba, an autonomous robot vacuum cleaner that dodges obstacles while cleaning.

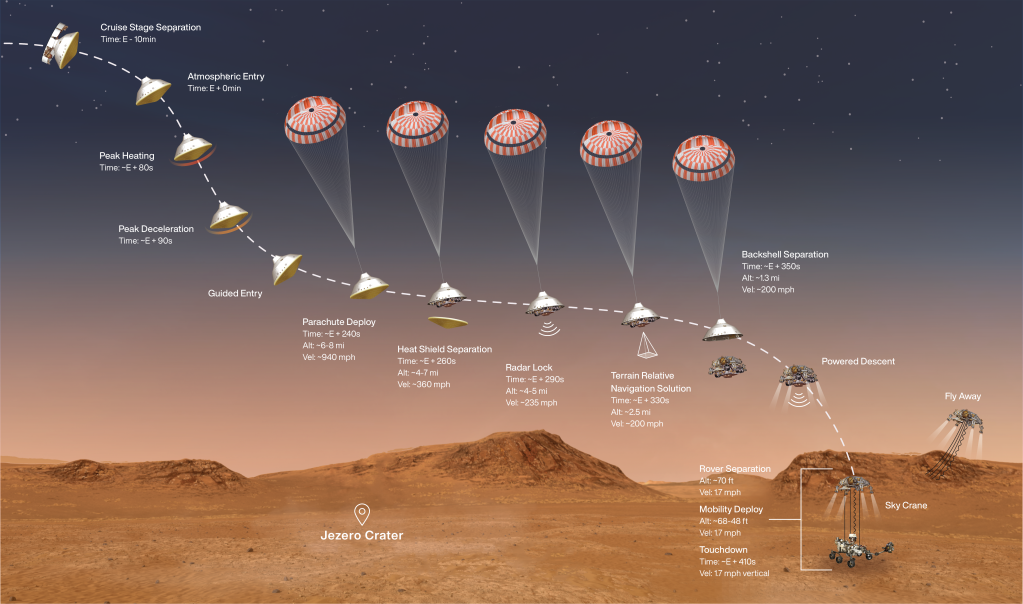

In 2004, NASA’s exploration rovers Spirit and Opportunity navigated the surface of Mars without human intervention. The same year saw the release of the science-fiction movie, I, Robot, set in 2035, where humanoid robots serve humanity.

The year 2009 saw Google begin secretly building a driverless car, which passed Nevada’s self-driving test in 2014.

In the past decade, Artificial Intelligence (AI) has become an integral part of our daily lives, with its influence felt in almost every aspect of our existence. From virtual assistants that can understand and respond to our voice commands to robots that look and behave like humans, technology has advanced significantly, making it easier for us to perform tasks that were previously impossible or time-consuming. Here are some of the highlights of AI in the recent decade:

In 2010, Microsoft released Kinect for Xbox 360, the first gaming device to track human body movement through a 3D camera and infrared detection. This device paved the way for more advanced gesture recognition technologies in gaming.

The following year, in 2011, IBM’s Watson, a natural language question-answering computer, defeated two former Jeopardy! champions, Ken Jennings and Brad Rutter, in a televised game. This marked a significant milestone in the development of AI and showcased its potential for solving complex problems.

The same year, Apple released Siri, a voice-controlled personal assistant for iOS devices. With a natural-language user interface, Siri could understand and respond to voice commands, making it easier for users to interact with their devices.

In 2012, Google researchers Jeff Dean and Andrew Ng trained a massive neural network with 16,000 processors to detect cat images without any background information, by presenting it with 10 million unlabeled images found in YouTube videos. This demonstrated the ability of AI to recognize patterns in vast amounts of unstructured data.

The following year, in 2013, the Never Ending Image Learner (NEIL) program was released by Carnegie Mellon University. The program works 24/7 learning information about images that it discovers on the internet, allowing it to continually expand its knowledge and capabilities.

In 2014, Microsoft released Cortana, a virtual assistant similar to Apple’s Siri. Additionally, a chatbot called Eugene Goostman, which posed as a 13-year-old boy, was reported to have passed the Turing test by convincing 33% of human judges at a Royal Society event that it was actually a human. The claim was disputed, but the event drew wide attention to conversational AI.

The same year, Amazon released Alexa, a voice assistant built into its Echo smart speakers that functions as a personal assistant. With its natural-language processing capabilities, Alexa has become a household name.

In 2015, Elon Musk, Stephen Hawking, and Steve Wozniak, among 3,000 others, signed an open letter requesting a ban on the development and adoption of autonomous weapons for war purposes and calling for ethical standards in the development of AI.

In 2016, Hanson Robotics developed the humanoid robot Sophia, while Google released its smart speaker, Google Home. Sophia is billed as the first “robot citizen,” with the ability to see, make facial expressions, and communicate via AI. In 2017, Facebook’s AI Research lab trained two chatbots to negotiate via machine learning, and the bots drifted into their own shorthand language, which demonstrated the need for a better understanding of AI.

One notable event during this period was the emergence of Tay, a Twitter bot developed by Microsoft in 2016. Tay was a chatbot that used machine learning to learn from user interactions and tweets. However, within a day of its release, Tay began spewing racist, sexist, and hate-filled tweets. It became apparent that Tay had learned not only from friendly interactions but also from hateful and offensive tweets. Some users had deliberately aimed to turn Tay into a digital Hitler. This incident demonstrated the potential for AI to be manipulated for harmful purposes and highlighted the need for stricter regulation of AI development and use.

Between 2015 and 2017, Google DeepMind developed AlphaGo, a computer program that beat several of the world’s top human players in the board game Go, including Lee Sedol in 2016. This marked a significant milestone in the development of AI for game playing and strategy.

In 2018, Alibaba developed an AI model that scored better than humans in a Stanford University reading and comprehension test. Alibaba’s language-processing model scored 82.44 against a human score of 82.30 on a set of 100,000 questions. Additionally, Google introduced BERT, enabling anyone to train their own question-answering system.

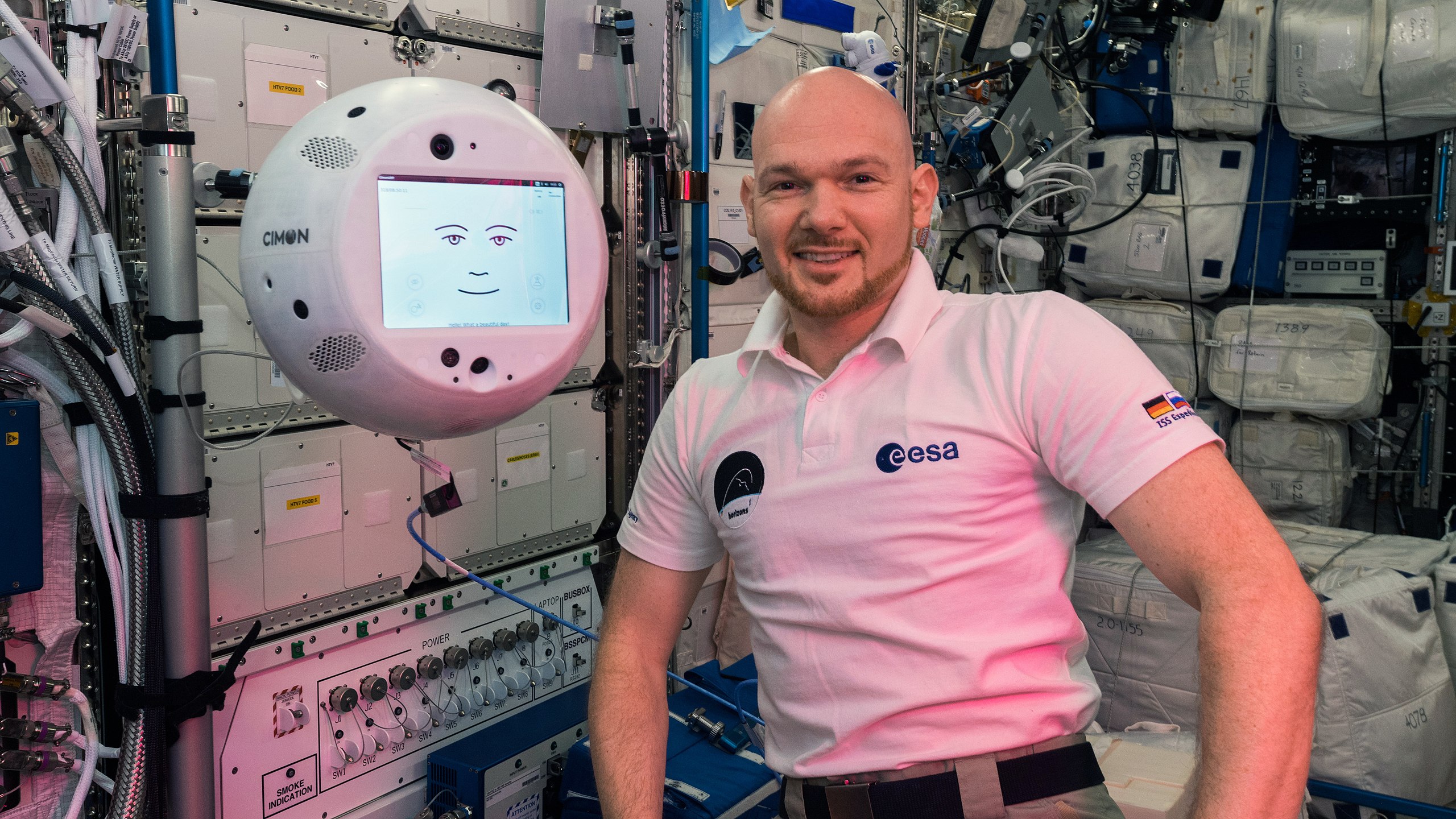

From 2018 to 2019, CIMON (short for Crew Interactive MObile CompanioN), the result of a partnership between the German space agency DLR, Airbus, and IBM, assisted the astronauts aboard the ISS.

In 2020, OpenAI introduced GPT-3, a pre-trained language model that generates text.

In 2021, NASA’s Perseverance rover made a stunning landing on Mars.

The advancements in AI over the years have reached into nearly every aspect of our daily lives. With AI increasingly becoming part of our routines, it has become easy to take the technology for granted. Nonetheless, the history of AI highlights the significant achievements and milestones that have been made in the field.

AI from 2000 to the present day:

- Honda released ASIMO, an artificially intelligent humanoid robot capable of walking as fast as humans and delivering trays to customers in restaurants.

- Steven Spielberg’s sci-fi film “A.I. Artificial Intelligence” revolved around David, a childlike android exclusively programmed with the ability to love.

- iRobot released the popular Roomba, an autonomous robot vacuum cleaner that cleans while dodging hurdles.

- Robotic exploration rovers of NASA, Spirit and Opportunity, navigated the surface of Mars in the absence of human intervention.

- Google began secretly building a driverless car, which passed Nevada’s self-driving test by 2014.

- Microsoft released Kinect for Xbox 360, the first gaming device tracking human body movement through a 3D camera and infrared detection.

- Apple released Siri, a built-in, voice-controlled personal assistant for Apple users.

- A massive neural network equipped with 16,000 processors was trained by Google researchers Jeff Dean and Andrew Ng to detect cat images without any background information.

- Alibaba developed an AI model that scored better than humans in a Stanford University reading and comprehension test.

- OpenAI GPT-3, a language model generating text through pre-trained algorithms, was introduced in May 2020.

The future.

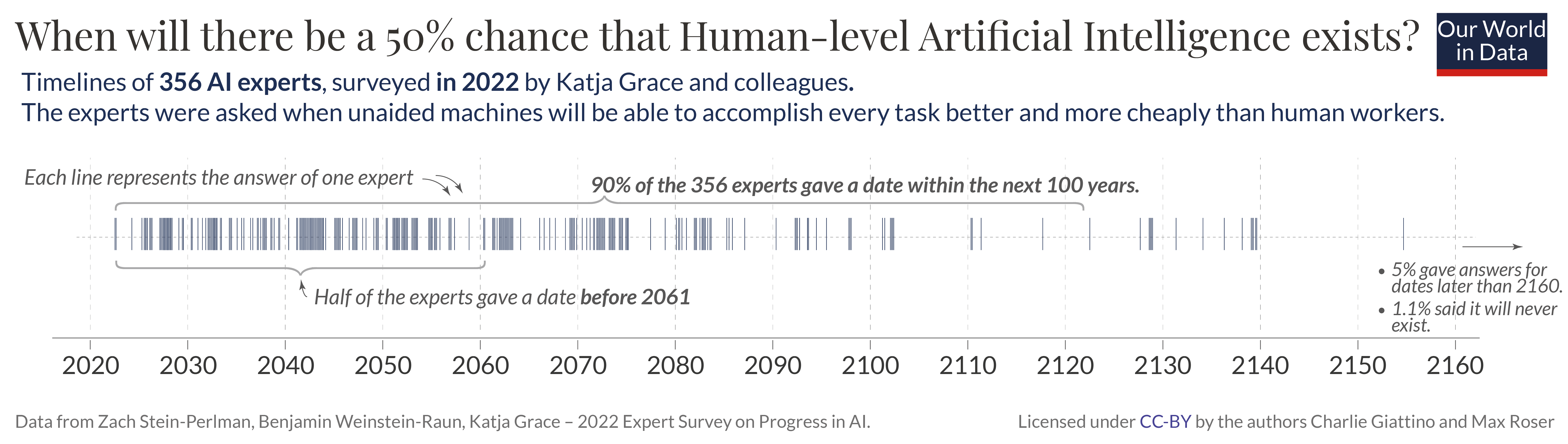

So when will the singularity happen? We don’t know. A recent survey of AI experts suggests it will happen in this century, possibly within the next 40 years.

The only certainty is that this development will be exponential. And when it happens, it will happen in an instant.

![When will singularity happen? 1700 expert opinions of AGI [2023]](https://research.aimultiple.com/wp-content/uploads/2017/08/wow.gif) There’s a very good graphic by Mother Jones visualizing this.

Thank you for reading and for staying tuned. Which moments in the history of AI development are pivotal for you? Did I miss any fundamentals? Are you scared of singularity?